By Frank1000 - Thu Apr 10, 2014 4:15 pm

#379652

Hi, I’ve built cardboard VR goggles for my smartphone, using its gyro to look around in a VR scene that I produced with the Unity3D game engine. It works great: the stereo split screen delivers the correct image for each eye, and you can turn around and watch the scene in 3D.

Now, the VR scene is rendered in real time, which limits the render quality you can get during playback on a smartphone.

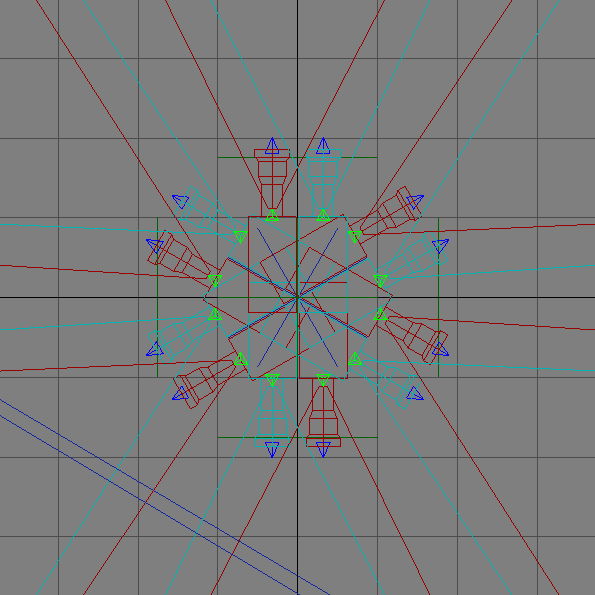

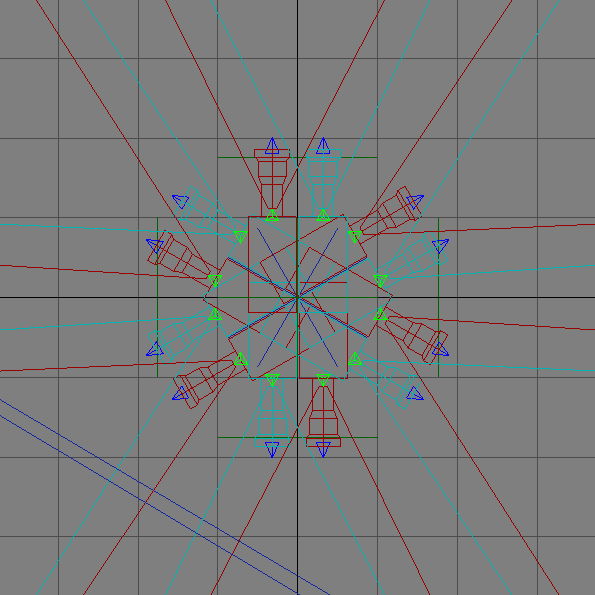

So I rendered a Maxwell scene with a multi stereo camera setup and stitched the pictures from the left cameras and the right cameras together separately. This is necessary because you need to keep the relation between the left and right eye. Two spherical renders wouldn’t work, because the stereo separation between the two cameras is gradually lost the further you turn to the side.
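To put a number on why two fixed spherical renders lose the stereo effect, here is a minimal sketch in Python. It assumes two panoramic cameras placed side by side and a viewer who only turns horizontally; the function name and the 64 mm eye distance are just illustrative choices of mine, not anything from Maxwell or Unity. The separation that is still perpendicular to the viewing direction shrinks with the cosine of the yaw angle:

```python
import math

def effective_baseline(ipd_m: float, yaw_deg: float) -> float:
    """Separation of two fixed panoramic cameras as seen from a given yaw.

    ipd_m   -- distance between the two cameras in metres (assumed value)
    yaw_deg -- how far the viewer has turned away from straight ahead
    Only the component perpendicular to the view direction gives depth.
    """
    return ipd_m * math.cos(math.radians(yaw_deg))

if __name__ == "__main__":
    # Print how the usable eye separation changes while turning around.
    for yaw in (0, 45, 90, 135, 180):
        mm = effective_baseline(0.064, yaw) * 1000.0
        print(f"yaw {yaw:3d} deg -> effective baseline {mm:6.1f} mm")
```

At 90 degrees the separation drops to zero (no depth at all), and behind you it turns negative, meaning left and right are swapped. That is the reason for using many stereo pairs in a circle instead.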

OK, now the wish-list part: a Maxwell Render lens where you define the number of stereo cams in a circle and their distance to the center, and which then renders the multi-cam spherical movies for the left and right eye.
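Roughly, the geometry such a lens would need could look like the sketch below. This is plain Python, not Maxwell's API; `stereo_ring`, `Camera`, and the parameter names are hypothetical, and I am assuming Unity-style axes (X right, Z forward, yaw measured clockwise seen from above). The two inputs are the ones from the wish above: the number of stereo cams in the circle and their distance to the center.

```python
import math
from dataclasses import dataclass
from typing import List

@dataclass
class Camera:
    eye: str        # "left" or "right"
    yaw_deg: float  # viewing direction; 0 looks along +Z
    x: float        # position on the ground plane
    z: float

def stereo_ring(num_pairs: int, eye_offset: float) -> List[Camera]:
    """Place num_pairs stereo pairs evenly around a circle.

    Each camera sits eye_offset away from the center, so the two
    cameras of a pair are 2 * eye_offset apart, and the pair always
    stays perpendicular to its own viewing direction.
    """
    cams = []
    for i in range(num_pairs):
        yaw = 360.0 * i / num_pairs
        a = math.radians(yaw)
        # Forward is (sin a, cos a); the matching "right" vector is (cos a, -sin a).
        rx, rz = math.cos(a), -math.sin(a)
        cams.append(Camera("left", yaw, -eye_offset * rx, -eye_offset * rz))
        cams.append(Camera("right", yaw, eye_offset * rx, eye_offset * rz))
    return cams

def slice_fov_deg(num_pairs: int) -> float:
    """Horizontal angle each pair has to cover before stitching."""
    return 360.0 / num_pairs

if __name__ == "__main__":
    for cam in stereo_ring(num_pairs=8, eye_offset=0.032):
        print(f"{cam.eye:5s} yaw={cam.yaw_deg:5.1f} x={cam.x:+.3f} z={cam.z:+.3f}")
```

All the "left" cameras would then be stitched into one panorama and all the "right" ones into another. More pairs mean narrower slices and a smoother stereo transition while turning, at the cost of more renders.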

THAT’D BE SUPER AWESOME and would leave everything else you can achieve in visual immersion far behind.

The only thing better than this would be either being at the location yourself, or receiving Matrix-movie-like spinal stimulation for the simulation.

So yeah, and then there could be a pre-prepared Unity3D scene (I have one) where you just exchange the left-right movies with your production, hit build, and put it online in seconds for everybody to install and watch on their smartphones with simple stereo magnifier goggles (like mine, or the ones that will surely be available to buy for $2 soon), or on an Oculus Rift setup etc. And BAM, you’re in the movie scene!

Regards,

Frank

- By Jochen Haug
